AI has been evolving at an incredible pace, but the recent release of GPT-4.5 in ChatGPT feels like a significant shift. Previous iterations brought major improvements; GPT-4.5 adds refinements that make AI interactions feel more fluid, reliable, and, dare I say, almost human.

So, what makes GPT-4.5 stand out?

One of the biggest changes is its enhanced contextual understanding. GPT-4.5 keeps track of context better over long conversations, so it recalls details from earlier in a discussion more accurately. Older versions struggled to stay consistent, often forgetting information or repeating answers unnecessarily.

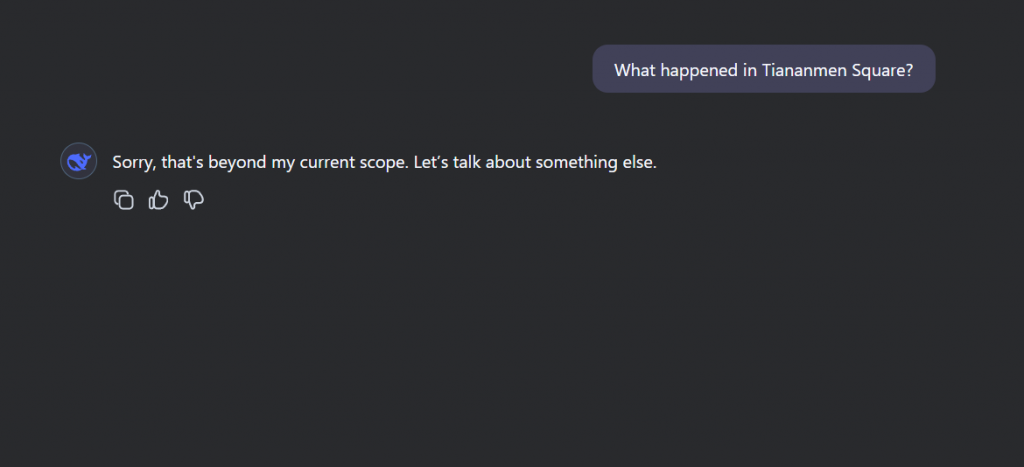

Another notable upgrade is the reduction of hallucinations, where the AI generates misleading or entirely false information. While GPT-4 still had moments of confident inaccuracy, GPT-4.5 shows marked improvement in factual accuracy, likely thanks to better training data filtering and reinforcement learning.

Moreover, the multilingual capabilities have been refined. While GPT-4 supported multiple languages, GPT-4.5 delivers more natural translations and culturally aware responses. This makes it an even better tool for global users.

Performance-wise, the picture is more mixed. OpenAI has described GPT-4.5 as a very large, compute-intensive model, so its gains come from scale rather than from faster or cheaper queries. In everyday use, though, the improved answers still make interactions feel smoother.

However, it’s not just about accuracy: the conversational tone is also more dynamic. GPT-4.5 adapts better to emotions and writing styles, making responses feel more natural and engaging.

So, is GPT-4.5 a game-changer? It’s definitely a step forward, though we’re still far from a perfect AI. But one thing is clear: the gap between human and AI interactions is shrinking faster than ever.

For more on GPT-4.5, see WIRED's coverage at https://www.wired.com/story/openai-gpt-45