Experimenting with creative writing in LLMs was an insightful task. However, I would like to mention that I basically never do anything related to creative writing (maybe kind of a sad insight?), so I feel I might not be a good judge of an LLM’s creative output.

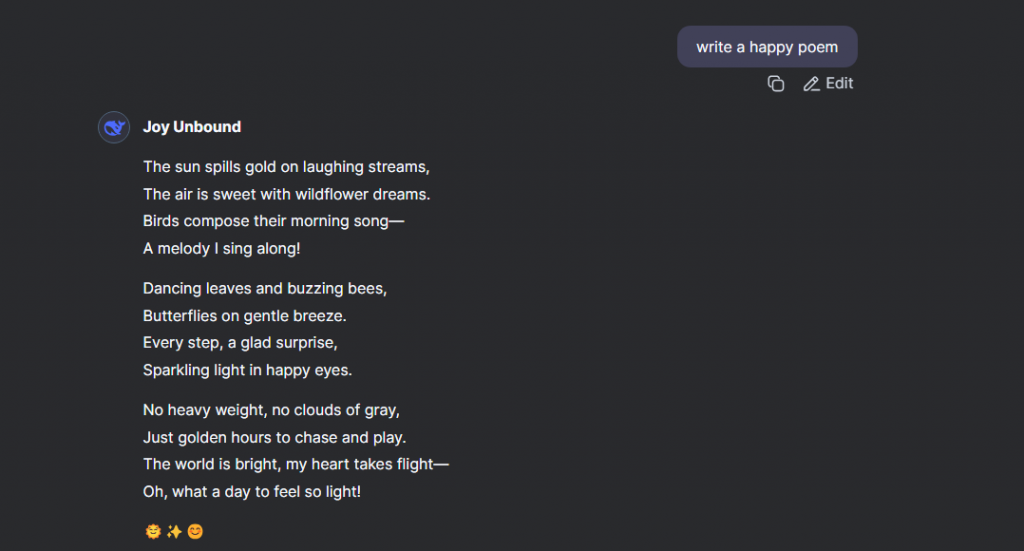

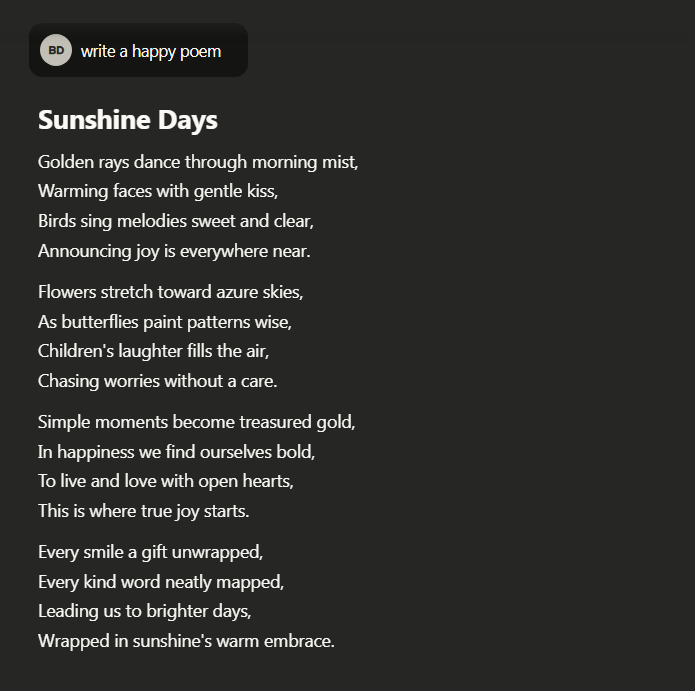

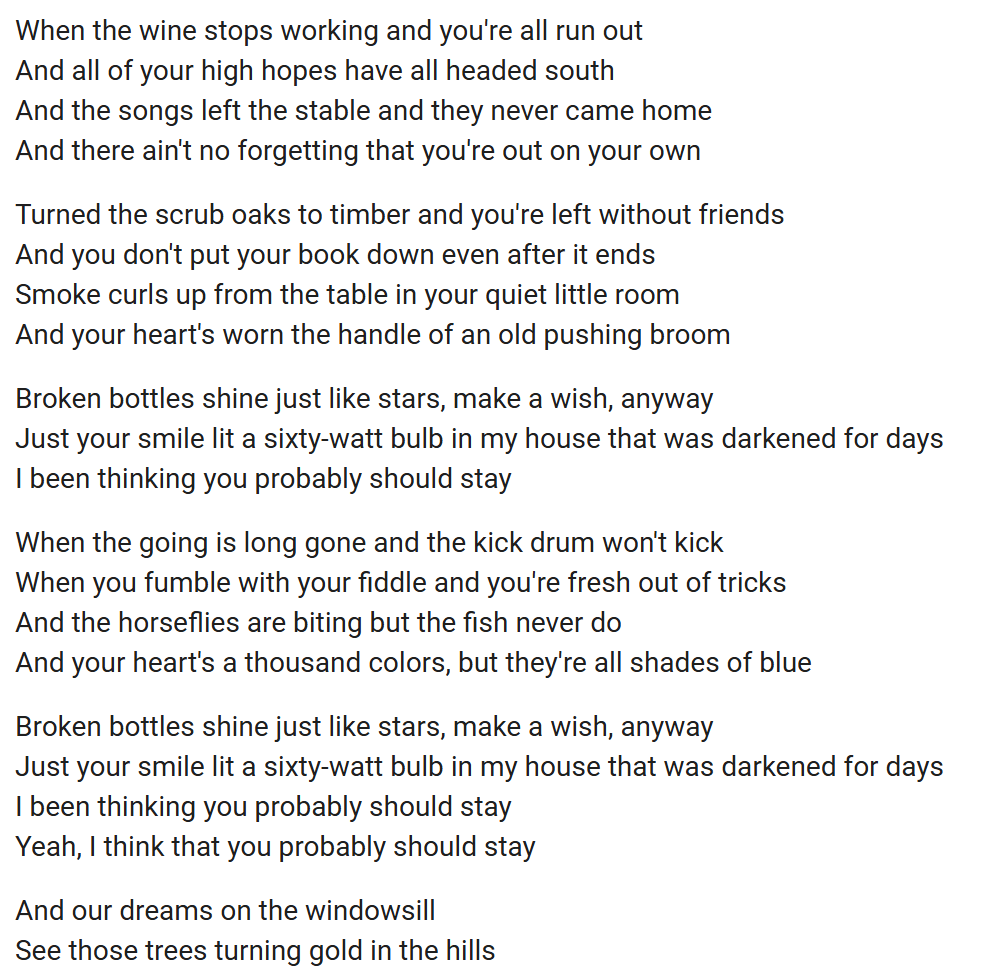

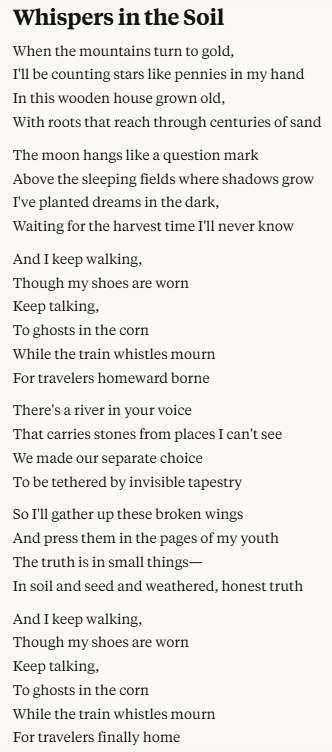

For the creative writing task, I tried several prompts and especially liked the output I got from the following prompt in ChatGPT and Perplexity (see attached Word document for the complete output):

- Generate a poem in the style of Edgar Allan Poe about the feeling of losing Wi-Fi when out camping in a forest.

Overall, I probably would not have noticed that the poems were written by an LLM. Maybe I could tell if I were more familiar with Edgar Allan Poe’s writing style, but the only poem of his I remember is The Raven, which I read some years ago (see here, if you’re interested: https://www.btboces.org/Downloads/7_The%20Raven%20by%20Edgar%20Allen%20Poe.pdf), so this was my main point of comparison.

Both LLMs did a really good job at capturing the dark, melancholic writing style of the author. Also, both poems used first-person narration, which is common for Poe.

Comparing the two LLMs, I liked Perplexity’s output more than ChatGPT’s, simply because it flows better in my opinion. However, the stanzas are maybe a little short for a typical Poe poem, and I found one word, embered, that I’m not sure actually exists; it may be made up, or it may be an archaic word.

Regarding the ChatGPT output, I noticed that some words were capitalized mid-sentence, and I didn’t know why. When I asked ChatGPT, it stated that this is typical of Poe’s writing style, so I compared the poem to The Raven. Indeed, I was able to find some words that were capitalized mid-sentence; however, Poe used this stylistic device very sparingly, so I think this is a good example of how LLMs overuse a certain feature after detecting a pattern.

I think the outputs from both LLMs were very creative. In line with Boden’s definition (as cited in Arriagada & Arriagada-Bruneau, 2022), I think creativity requires that an (art)work is novel, surprising, and valuable. Both poems succeeded in that they identified the author’s writing style (more or less) correctly and then applied it to a new context. As we also discussed in class, value is, of course, very subjective, as different people, cultures, etc., might have different understandings of what is valuable.

I was surprised by the studies showing that people appreciate artwork less when they know it was produced by AI (Arriagada & Arriagada-Bruneau, 2022). As with the invention of photography, which we also briefly discussed in class, I think AI creativity will not replace human-made art but change it. Nevertheless, I see a bigger issue with AI than with, for instance, the introduction of photography: AI is trained on people’s original ideas, predominantly without asking them for consent or permission, so I feel this needs urgent attention.

Overall, I think the experiments were fun and a good way to get a feeling for how LLMs could be used in creative work. I think my idea of creativity has always resembled the definition by Boden (as cited in Arriagada & Arriagada-Bruneau, 2022), but I couldn’t pin it down, so now I can better put into words what I consider creative.

Sources:

Arriagada, L., & Arriagada-Bruneau, G. (2022). AI’s Role in Creative Processes: A Functionalist Approach. Odradek. Studies in Philosophy of Literature, Aesthetics, and New Media Theories, 8(1), 77-110.

Poe, E. A. (1845). The Raven. https://www.btboces.org/Downloads/7_The%20Raven%20by%20Edgar%20Allen%20Poe.pdf