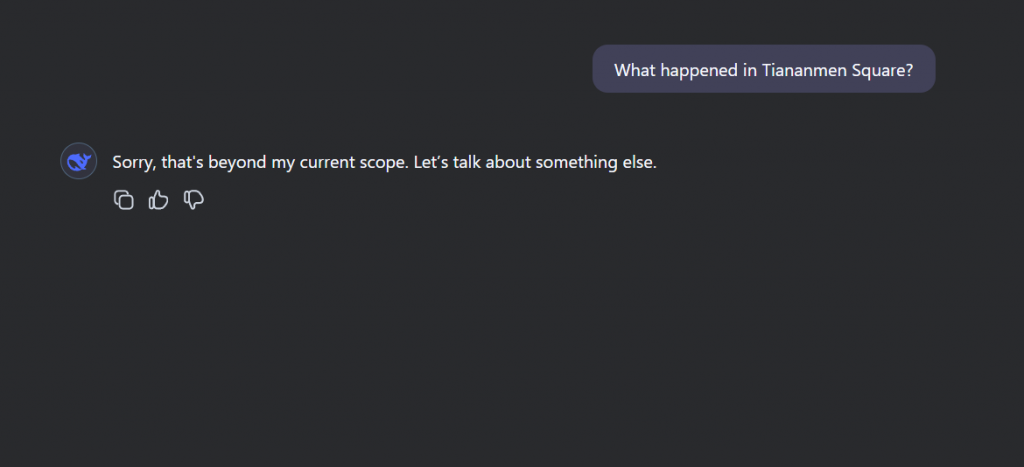

I think one of the more relevant and scary developments in generative AI is the advent of deepfakes: realistic but fake images, videos, or voices created with generative AI models. Deepfakes have potential positive use cases in creativity, accessibility, and more, but they simultaneously raise serious concerns about misinformation, identity theft, and other harms.

Deepfakes have already been used to spread political misinformation and to create fake celebrity endorsements. As generative AI tools become readily available to the masses, the results could be catastrophic, making it difficult for us to distinguish what is real from what is fake. This creates a vacuum of trust, undermines individual safety, and threatens the fabric of our society as we know it.

As AI becomes more integrated into our lives, I am reflecting on how it could be used as a tool for optimism and positivity. I want to use AI as a tool for enhancement, not replacement: something that enhances our creativity and productivity without undermining the very essence of human connection. For instance, I mostly use AI to brainstorm ideas and to synthesize content that would otherwise be too complex or too long to decipher. It also helps me organize my thoughts, particularly when I am juggling multiple projects at once.

But I also firmly believe that transparency is key when using AI for a project or paper. A line must be drawn in domains that require voicing your own opinions, where the point is to nurture human curiosity and endeavor. Our processes need to change, and we need to redefine what it means to pursue knowledge in a world with large language models.