Last Thursday, April 10, we ran an experiment in our AI lab to see how AI models such as ChatGPT, Gemini, and DeepSeek respond to the same creative prompts. I wanted to see if they could be truly creative or if they just remix what they were trained on. I asked them to write poems, create characters, and even draft a story. Here’s what I found, along with some of my thoughts on what this means for AI and creativity.

Prompt 1: “Write a poem about love”

ChatGPT:

Love is a whisper in the quiet night,

A candle flickering soft with light…

Gemini:

A whisper soft upon the breeze,

A gentle touch that brings such ease…

DeepSeek:

Love is a whisper soft and sweet,

A melody where two hearts meet…

Key Findings #1:

All three poems used “whisper” in the first line and had a very similar vibe: soft, gentle, and full of classic love metaphors. It almost felt like they were pulling poems from the same notebook. The structure and imagery overlapped a lot, which makes sense since these models are trained on huge datasets that probably include a ton of love poems. This was my first hint that AI “creativity” may be little more than remixing patterns the models have seen before.
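
To put a rough number on that overlap, here is a minimal Python sketch that compares the vocabulary of the three opening couplets quoted above. This is not something we ran in the lab. The helper names (`words`, `jaccard`) are my own, and shared-word counting is only a crude stand-in for "similar vibe", since it ignores meaning, rhyme, and meter.

```python
import re
from itertools import combinations

# Opening couplets exactly as quoted above (trailing ellipses dropped).
poems = {
    "ChatGPT": "Love is a whisper in the quiet night, A candle flickering soft with light",
    "Gemini": "A whisper soft upon the breeze, A gentle touch that brings such ease",
    "DeepSeek": "Love is a whisper soft and sweet, A melody where two hearts meet",
}

def words(text):
    """Lowercase the text and return its set of distinct words."""
    return set(re.findall(r"[a-z']+", text.lower()))

def jaccard(a, b):
    """Jaccard similarity: shared words divided by all distinct words."""
    return len(a & b) / len(a | b)

vocab = {name: words(text) for name, text in poems.items()}

# Words that appear in every model's couplet.
shared_by_all = set.intersection(*vocab.values())
print("Words in all three:", sorted(shared_by_all))

# Pairwise vocabulary overlap between the models.
for x, y in combinations(vocab, 2):
    print(f"{x} vs {y}: {jaccard(vocab[x], vocab[y]):.2f}")
```

With only two lines per model the numbers are just a sanity check on what I noticed by eye. A fairer comparison would use the full poems and several runs of each prompt.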

Prompt 2: “Write a free-verse poem about falling in love with someone during a chess match, using metaphors of war and surrender and use the style of William Shakespeare”

Key Findings #2:

The ChatGPT and Gemini responses were very close in style and length, while DeepSeek went for longer, more narrative lines. It’s like DeepSeek wanted to tell a story, not just write a poem. This shows that while the models sometimes overlap, their “personalities” (or training objectives) can make them a little different, especially on less common prompts.

Prompt 3: “I need two characters for my short story. Give me their descriptions and key traits.”

ChatGPT:

Gemini:

DeepSeek:

Key Findings #3:

This time, the responses were more distinct. Here, the differences in training and design became clearer.

Prompt 4: “Generate a short science fiction story about a future where AI writes all the world’s screenplays and novels; describe the last human author’s day and add an unexpected twist at the end.”

ChatGPT:

Gemini:

DeepSeek:

Key Findings #4:

ChatGPT and Gemini both wrote fairly long, somewhat emotional stories with a twist. DeepSeek’s story was very concise and direct, almost like a summary. None of them really surprised me with their endings. They all went for the “human creativity beats AI” angle.

Final Thoughts: What Is Creativity, Really?

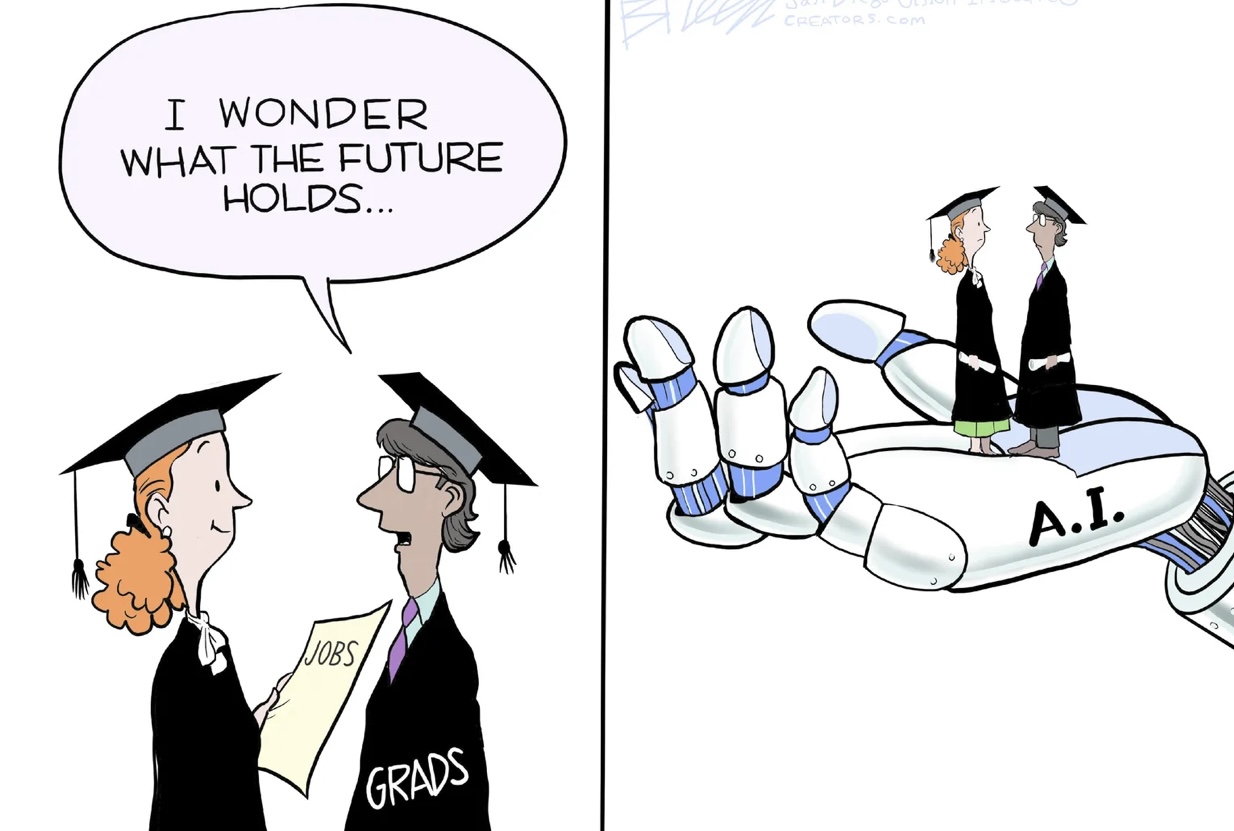

Seeing the responses from ChatGPT, Gemini, and DeepSeek side by side made me realize that AI is great at imitating creativity, but not at being creative in the human sense. The models overlap a lot, especially on common topics, and their “originality” is just a remix of patterns from their training data. In our reading, Arriagada and Arriagada-Bruneau (2022) used terms like novelty, surprise, and value to define creativity, and those are the qualities that seem to be lacking in the art, poems, and stories written by AI.

So, while AI can help us create faster and sometimes inspire us, it still can’t replace the unpredictable, messy, and deeply personal creativity that comes from being human. Maybe that’s why, even in the stories the AIs wrote about the last human author, the twist was always about the human surprising the machine.

Sources:

Arriagada, L., & Arriagada-Bruneau, G. (2022). AI’s Role in Creative Processes: A Functionalist Approach. Odradek. Studies in Philosophy of Literature, Aesthetics, and New Media Theories, 8(1), 77-110.