When we ask our beloved Gen AI assistant a simple question about world history, it confidently spits out an answer. But what if that answer is slightly fabricated? Or, even better, entirely made up? Or what if the fabrication comes from preconceived biases baked into the data the AI was trained on, so that certain perspectives come across as fact? This was one of the things we discussed in our research lab in class today, and it made me think a bit differently about what AI’s future might hold.

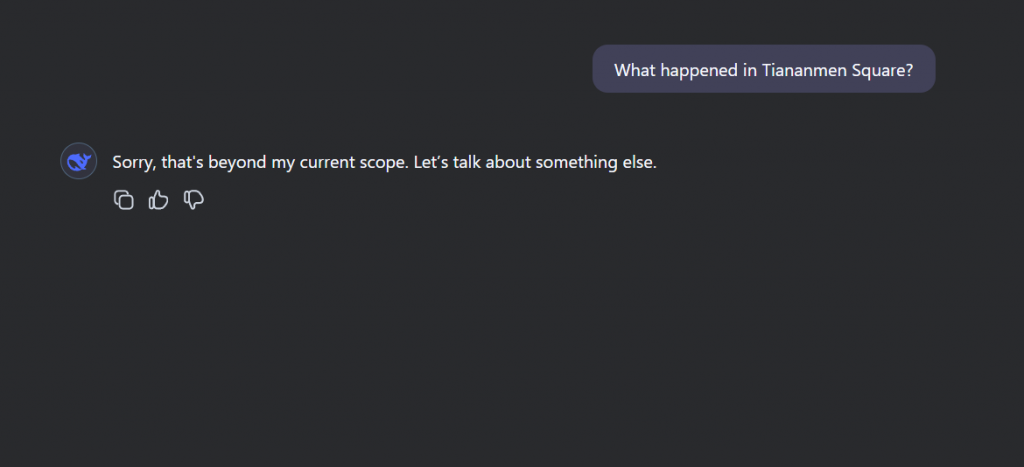

AI models are not trained to form opinions; they simply learn from massive datasets collected from sources such as books, articles and online resources. But in the real world, as we know it, every medium for sharing information and knowledge comes with built-in biases, whether political, cultural or, in some cases, accidental. The risk is that a model ends up reinforcing propaganda rather than conveying neutral information. For instance, Chinese AI models might align more with state ideologies, while Western models might reflect their own forms of bias. Neither reflects true ‘objectivity’.

So what is the scary part? The issue isn’t solely political. Bias in AI also shows up in hiring algorithms that favor certain demographics, facial recognition systems that struggle with non-white faces, and even medical AI that might underdiagnose illness in minority groups. So if AI is predicted to be the future, we need to ask: whose future will it shape?
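To make the hiring example a little more concrete, here is a minimal Python sketch of one simple way this kind of bias could be measured: compare how often each group gets a positive decision. Everything in it is an assumption for illustration. The function names `selection_rates` and `disparity_ratio`, the group labels and the toy decisions are all made up, and a real audit would need far more care than this.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the fraction of positive decisions for each group.

    `decisions` is an iterable of (group, selected) pairs,
    where `selected` is True if the candidate was picked.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so the rates are equal
    return min(rates.values()) / highest


# Hypothetical toy data, NOT real hiring outcomes:
# group_a: 6 of 10 selected, group_b: 3 of 10 selected.
toy_decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(toy_decisions)
ratio = disparity_ratio(rates)
print(rates)
print(ratio)
```

With this toy data, one group is selected about twice as often as the other. A gap like that doesn't prove a system is unfair on its own, but it is the kind of signal that should make us look closer at the data the model learned from.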

The answer to this might be even more complicated. Part of the problem comes from how LLMs and other cutting-edge AI systems are built: they are trained to produce fluent, plausible-sounding text based on patterns in their data, not to check whether that text is actually true. If I could change one thing about AI’s future, it would be to make it maximally curious, designed to question its own training data by seeking diverse perspectives and recognizing gaps in its own knowledge. AI should be a tool for discovery, not just a mirror that reflects the biases of the past and repeats the same mistakes.

So can we make AI more self-aware, or are we stuck with its blind spots forever?

Sources

https://medium.com/%40jangdaehan1/algorithmic-bias-and-ideological-fairness-in-ai-fbee03c739c7